Planet Python

Last update: April 26, 2025 04:42 AM UTC

April 26, 2025

Ed Crewe

Talk about Cloud Prices at PyConLT 2025

Introduction to Cloud Pricing

I am looking forward to speaking at PyConLT 2025.

My talk is called "Cutting the Price of Scraping Cloud Costs".

It's been a while (12 years!) since my last Python conference, EuroPython Florence 2012, when I spoke as a Django web developer, although I did give a Golang talk at KubeCon USA last year.

I work at EDB, the Postgres company, on our Postgres AI product, the cloud version of which runs across the main cloud providers: AWS, Azure and GCP.

The team I am in handles the identity management and billing components of the product. So whilst I am mainly a Golang micro-service developer, I have dipped my toe into Data Science, having rewritten our Cloud prices ETL using Python & Airflow, which is the subject of my talk in Lithuania.

Cloud pricing can be surprisingly complex ... and the price lists are not small.

The full price lists for the 3 CSPs (cloud service providers) together contain almost 5 million prices, known as SKUs (Stock Keeping Units):

csp x service x type x tier x region

3 x 200 x 50 x 3 x 50 = 4.5 million

csp = AWS, Azure and GCP

service = vms, k8s, network, load balancer, storage etc.

type = e.g. storage - general purpose E2, N1 ... accelerated A1, A2 multiplied by various property sizes

tier = T-shirt size tiers of usage, i.e. more use = cheaper rate - small, medium, large

region = us-east-1, us-west-2, af-south-1, etc.
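The estimate above is simply the product of the dimension counts. A quick check in Python, using the rough per-dimension counts from the list (approximations for illustration, not exact catalogue sizes):

```python
from math import prod

# Rough per-dimension counts from the estimate above (approximations, not exact catalogue sizes)
dimensions = {
    "csp": 3,        # AWS, Azure, GCP
    "service": 200,  # vms, k8s, network, load balancer, storage, ...
    "type": 50,      # machine/storage families multiplied by property sizes
    "tier": 3,       # small, medium, large usage tiers
    "region": 50,    # us-east-1, us-west-2, af-south-1, ...
}

total_skus = prod(dimensions.values())
print(f"{total_skus:,}")  # 4,500,000
```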

We need to gather all the latest service SKUs that our Postgres AI may use and total them up as a cost estimate when customers are selecting the various options for creating or adding to their installation.

As part of this process, we apply the additional pricing for our product and any private offer discounts for it.

Therefore we needed to build a data pipeline to gather the SKUs and keep them current.

Previously we used a third-party, kubecost-based provider's data; however, our usage was not sufficient to justify paying for this particular cloud service when its free usage expired.

Hence we needed to rewrite our cloud pricing data pipeline. This pipeline is in Apache Airflow but it could equally be in Dagster or any other data pipeline framework.

My talk deals with the wider points around cloud pricing, refactoring a data pipeline and pipeline framework options. But here I want to provide more detail on the data pipeline's Python code, its use of Embedded Postgres and Click, and the benefits for development and testing: some things I didn't have room for in the talk.

Outline of our use of Data Pipelines

Notably, this includes local development mode, for running up the pipeline framework locally and doing test runs.

Even with some reloading on edit, it can still be a long process: running up a pipeline and then executing the full set of steps, known as a directed acyclic graph (DAG).

One way to improve the developer experience (DevX) is to encapsulate each DAG step's code as much as possible.

That means removing shared state where that is viable, and allowing individual steps to be tested separately and rapidly with fixture data, with fast stand-up and tear-down of temporary embedded storage.

To avoid shared state persistence across the whole pipeline we use extract transform load (ETL) within each step, rather than across the whole pipeline. This enables functional running and testing of individual steps outside the pipeline.
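A minimal sketch of a step that owns its own extract, transform and load. To keep it self-contained it uses an in-memory SQLite database as a stand-in for the temporary embedded Postgres, and the function, table and field names are hypothetical:

```python
import sqlite3
from typing import Callable, Iterable


def run_step(extract: Callable[[], Iterable[dict]], unit_map: dict) -> list:
    """Hypothetical self-contained pipeline step: extract -> transform -> load.

    Storage is created and torn down inside the step, so nothing is shared
    with other steps and the step can be tested alone with fixture data.
    """
    conn = sqlite3.connect(":memory:")  # stand-in for a temporary embedded Postgres
    try:
        conn.execute("CREATE TABLE price (sku_id TEXT PRIMARY KEY, unit TEXT, usd REAL)")
        rows = (
            # Transform: standardise unit names and coerce prices to float
            (e["sku_id"], unit_map.get(e["unit"], e["unit"]), float(e["price"]))
            for e in extract()
        )
        with conn:  # load inside one transaction
            conn.executemany("INSERT INTO price VALUES (?, ?, ?)", rows)
        # The real pipeline would write a dump artefact here; the sketch returns the rows
        return conn.execute("SELECT * FROM price ORDER BY sku_id").fetchall()
    finally:
        conn.close()


# Fixture data replaces the remote scrape, so the step runs in milliseconds
fixture = [
    {"sku_id": "B2", "unit": "Hrs", "price": "0.5"},
    {"sku_id": "A1", "unit": "GB-Mo", "price": "0.023"},
]
result = run_step(lambda: fixture, {"Hrs": "hour", "GB-Mo": "GB-month"})
print(result)  # [('A1', 'GB-month', 0.023), ('B2', 'hour', 0.5)]
```

Because the extract is passed in as a callable, a test can swap the live scrape for a fixture without touching the pipeline framework at all.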

The Scraper Class

We need a standard scraper class to fetch the cloud prices from each CSP, so we use an abstract base class.

```python
from abc import ABC


class BaseScraper(ABC):
    """Abstract base class for Scrapers"""

    batch = 500
    conn = None
    unit_map = {"FAIL": ""}
    root_url = ""

    def map_units(self, entry, key):
        """To standardize naming of units between CSPs"""
        # A missing key falls back to "FAIL", which unit_map turns into "".
        # (Passing entry[key] as the .get() default would raise KeyError first.)
        value = entry.get(key, "FAIL")
        return self.unit_map.get(value, value)

    def scrape_sku(self):
        """Scrapes prices from CSP bulk JSON API - uses CSP specific methods"""
        pass

    def bulk_insert_rows(self, rows):
        """Bulk insert batches of rows - Note that Psycopg >= 3.1 uses pipeline mode"""
        query = """INSERT INTO api_price.infra_price VALUES
            (%(sku_id)s, %(cloud_provider)s, %(region)s, … %(sku_name)s, %(end_usage_amount)s)"""
        with self.conn.cursor() as cur:
            cur.executemany(query, rows)
```

This has 3 common methods:

- Mapping units to common ones across all CSPs

- A top-level scrape SKU method, with some CSP differences handled within sub-methods called from it

- Bulk insert rows, the main concrete method used by all scrapers

To bulk insert 500 rows per query we use Psycopg 3 pipeline mode, so it can send batches of inserts again and again without waiting for each response.
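The batching itself can be sketched in a few lines. This is a hedged illustration rather than the scraper's actual code: `insert_in_batches` is a hypothetical helper that works with any DB-API style cursor exposing `executemany()`, and the batch size of 500 mirrors `BaseScraper.batch`. With psycopg 3.1+ each `executemany()` call is itself pipelined; here a fake cursor records the batch sizes instead of talking to a database:

```python
from itertools import islice
from typing import Iterable, Iterator


def chunked(rows: Iterable[dict], size: int) -> Iterator[list]:
    """Yield successive lists of at most `size` rows."""
    it = iter(rows)
    while batch := list(islice(it, size)):
        yield batch


def insert_in_batches(cur, query: str, rows: Iterable[dict], batch: int = 500) -> int:
    """Hypothetical helper: send rows to cur.executemany() one batch at a time.

    Rows can come from a generator, so the full scrape never has to be
    held in memory. Returns the number of executemany() calls made.
    """
    calls = 0
    for chunk in chunked(rows, batch):
        cur.executemany(query, chunk)
        calls += 1
    return calls


class RecordingCursor:
    """Fake cursor for illustration: records batch sizes instead of hitting a database."""

    def __init__(self):
        self.batch_sizes = []

    def executemany(self, query, params):
        self.batch_sizes.append(len(params))


cur = RecordingCursor()
rows = ({"sku_id": str(i)} for i in range(1234))
calls = insert_in_batches(cur, "INSERT ...", rows)
print(calls, cur.batch_sizes)  # 3 [500, 500, 234]
```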

The database update against local embedded Postgres is faster than the time to scrape the remote web site SKUs.

The largest part of the Extract is done at this point. Rather than loading all 5 million SKUs, as we did with the kubecost data dump, and then querying out the 120 thousand for our product, by scraping the sources directly we only need to ingest those 120k SKUs. That saves handling 97.6% of the data!

The resulting speed is sufficient, although not as fast as loading a pg_dump file, which uses COPY.

Unfortunately, Python Psycopg is significantly slower when using cursor.copy, which militated against using zipped-up Postgres dumps. Hence all the data artefact creation and loading simply uses the pg_dump utility, wrapped as a Python shell command.
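Wrapping pg_dump as a shell command needs little more than `subprocess.run`. A sketch, assuming a local embedded Postgres on the given port, a `postgres` user, and the `api_price` schema from the INSERT query above; the helper names are hypothetical, while `--host`, `--port`, `--username`, `--schema`, `--format` and `--file` are standard pg_dump options:

```python
import shutil
import subprocess
from typing import List, Optional


def pg_dump_command(port: int, outfile: str, schema: str = "api_price",
                    host: str = "localhost", user: str = "postgres") -> List[str]:
    """Build a plain-SQL, uncompressed pg_dump command for one schema.

    Plain format keeps the dump grep-able when debugging a missing SKU.
    The user and schema defaults are assumptions for illustration.
    """
    return [
        "pg_dump",
        f"--host={host}",
        f"--port={port}",
        f"--username={user}",
        f"--schema={schema}",
        "--format=plain",
        f"--file={outfile}",
    ]


def run_pg_dump(port: int, outfile: str) -> Optional[str]:
    """Run pg_dump and return an error message, or None on success."""
    if shutil.which("pg_dump") is None:
        return "pg_dump not found on PATH"
    result = subprocess.run(pg_dump_command(port, outfile), capture_output=True, text=True)
    if result.returncode != 0:
        return result.stderr.strip() or f"pg_dump exited with code {result.returncode}"
    return None


print(" ".join(pg_dump_command(5377, "price.sql")))
```

Returning an error string rather than raising matches the `error = ...` style used by the DAG code later in the post.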

There is no need to use Python here when there is the tried and tested, C-based pg_dump utility, which ensures compatibility outside our pipeline. Later versions of pg_dump can always handle earlier Postgres versions.

We don't need to retain a long history of artefacts, since it is public data and never needs to be reverted.

This allows us a low retention level, cleaning out most of the old dumps on creation of a new one. So any storage saving on compression is negligible.

Therefore we avoid pg_dump compression, since it can be significantly slower, especially if the data already contains compressed blobs. Plain SQL COPY format also allows for data inspection if required, e.g. grepping for a SKU when debugging why a price may be missing.


Postgres Embedded wrapped with Go

Python doesn't have a maintained wrapper for Embedded Postgres; sadly, the https://github.com/Simulmedia/pyembedpg project has been abandoned 😢

Hence we use the most up-to-date wrapper, which is written in Go, running the Go binary via a Python shell command. It still lags behind by a version of Postgres, so it's on Postgres 16 rather than the latest, 17. But for the purposes of embedded use that is irrelevant.

By using a separate temporary Postgres per step, we can save a dumped SQL artefact at the end of each step and need no data dependency between steps, meaning individual step retries, in parallel, just work.
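One way to make this per-step lifecycle hard to get wrong is a context manager that always tears the temporary database down, even when the scrape or a check raises. This is a sketch of the pattern rather than the pipeline's code: `start_db` and `stop_db` are hypothetical callables standing in for the Go embedded-Postgres wrapper, and the data directory is a throwaway temp folder:

```python
import os
import shutil
import tempfile
from contextlib import contextmanager
from typing import Callable, Iterator


@contextmanager
def temporary_database(start_db: Callable[[str], int],
                       stop_db: Callable[[str], None]) -> Iterator[int]:
    """Start a throwaway database in a temp folder, yield its port, always clean up."""
    folder = tempfile.mkdtemp(prefix="embedpg-")
    try:
        port = start_db(folder)  # hypothetical: launches the embedded server
        try:
            yield port
        finally:
            stop_db(folder)  # hypothetical: stops the server, even on error
    finally:
        shutil.rmtree(folder, ignore_errors=True)  # no state survives the step


# Fakes standing in for the real embedded Postgres start/stop commands
seen = {}


def fake_start(folder: str) -> int:
    seen["folder"] = folder
    return 5377


def fake_stop(folder: str) -> None:
    seen["stopped"] = True


try:
    with temporary_database(fake_start, fake_stop) as port:
        raise RuntimeError(f"scrape against port {port} failed")
except RuntimeError as exc:
    print(exc)  # scrape against port 5377 failed

print(seen["stopped"], os.path.exists(seen["folder"]))  # True False
```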

The performance of dumping to a localhost socket is also superior. By processing everything in the same (if embedded) version of Postgres as our final target database, used by the Cloud Price Go micro-service, we remove any SQL compatibility issues and ensure full PostgreSQL functionality is available.

The final data artefacts will be loaded to a Postgres cluster price schema micro-service running on CloudNativePG.


Use a Click wrapper with Tests

The Click package wraps all the functionality for our pipeline in a command-line tool:

```
> pscraper -h
Usage: pscraper [OPTIONS] COMMAND [ARGS]...

  price-scraper: python web scraping of CSP prices for api-price

Options:
  -h, --help  Show this message and exit.

Commands:
  awsscrape    Scrape prices from AWS
  azurescrape  Scrape prices from Azure
  delold       Delete old blob storage files, default all over 12 weeks old are deleted
  gcpscrape    Scrape prices from GCP - set env GCP_BILLING_KEY
  pgdump       Dump postgres file and upload to cloud storage - set env STORAGE_KEY
  pgembed      Run up local embeddedPG on a random port for tests
  pgload       Load schema to local embedded postgres for testing
```

Example invocations:

```
> pscraper pgdump --port 5377 --file price.sql
> pscraper pgembed
> pscraper pgload --port 5377 --file price.sql
```

This lets us develop and debug the step code entirely outside the pipeline.

We can run pgembed to create a local database and pgload to add the price schema, then run individual scrapes from an editable install (pip install -e, in a pipenv environment) of the price scraper package.
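The `pgembed` command runs embedded Postgres up on a random port. How the Go wrapper picks that port isn't shown here, so this is only an assumption about how such a helper could work: bind a socket to port 0, let the operating system choose an unused port, and read it back. `find_free_port` is a hypothetical name:

```python
import socket


def find_free_port(host: str = "127.0.0.1") -> int:
    """Ask the OS for an unused TCP port by binding to port 0.

    The socket is closed before returning, so another process could in
    principle grab the port first; this is usually acceptable for local tests.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind((host, 0))
        return sock.getsockname()[1]


port = find_free_port()
print(f"pscraper pgload --port {port} --file price.sql")
```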

For unit testing we can create a mock response object for the data scrapers that returns different fixture payloads based on the query and monkeypatch it in. This allows us to functionally test the whole scrape and data artefact creation ETL cycle as unit functional tests.

Any issues with source data changes can be replicated via a fixture for regression tests.

```python
from unittest import TestCase

import psycopg
import requests

# fixtures, ROOT and MockConn are defined elsewhere in the test module


class MockResponse:
    """Fake to return fixture value of requests.get() for testing scrape parsing"""

    name = "Mock User"
    payload = {}
    content = ""
    status_code = 200
    url = "http://mock_url"

    def __init__(self, payload=None, url="http://mock_url"):
        self.url = url
        # Avoid a shared mutable default argument
        self.payload = payload if payload is not None else {}
        self.content = str(self.payload)

    def json(self):
        return self.payload


def mock_aws_get(url, **kwargs):
    """Return the fixture JSON that matches the URL used"""
    for key, fix in fixtures.items():
        if key in url:
            return MockResponse(payload=fix, url=url)
    return MockResponse()


class TestAWSScrape(TestCase):
    """Tests for the 'pscraper awsscrape' command"""

    @classmethod
    def setUpClass(cls):
        """Simple monkeypatch in mock handlers for all tests in the class"""
        psycopg.connect = MockConn
        requests.get = mock_aws_get
        # confirm that requests is patched hence returns short fixture of JSON from the AWS URLs
        result = requests.get("{}/AmazonS3/current/index.json".format(ROOT))
        assert len(result.json().keys()) > 5 and len(result.content) < 2000
```
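The test above monkeypatches by assigning `requests.get = mock_aws_get`, which stays in place for the rest of the test run. An alternative, shown here as a swapped-in technique rather than the author's code, is `unittest.mock.patch.object`, which restores the original attribute when the `with` block exits. To keep the sketch self-contained, `PriceClient`, `count_skus` and the fixture are hypothetical and no real HTTP library is involved:

```python
from unittest import mock


class PriceClient:
    """Hypothetical thin HTTP client; the real scraper calls requests.get()."""

    def get_json(self, url: str) -> dict:
        raise RuntimeError("network access is not allowed in unit tests")


def count_skus(client: PriceClient, url: str) -> int:
    """Hypothetical parser: count the SKU entries in a price-list payload."""
    return len(client.get_json(url).get("products", {}))


FIXTURES = {"AmazonS3": {"products": {"SKU1": {}, "SKU2": {}, "SKU3": {}}}}


def fake_get_json(self, url):
    """Return the fixture whose key appears in the URL, else an empty payload."""
    for key, fixture in FIXTURES.items():
        if key in url:
            return fixture
    return {}


def run_with_fixtures(url: str) -> int:
    # patch.object swaps the method only inside this block, then restores it,
    # so the fake cannot leak into other test classes
    with mock.patch.object(PriceClient, "get_json", fake_get_json):
        return count_skus(PriceClient(), url)


print(run_with_fixtures("https://pricing.example/AmazonS3/current/index.json"))  # 3
```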

A simple DAG with Soda Data validation

The Click commands for each DAG are imported at the top, one for the scrape and one for embedded Postgres. The DAG just becomes a wrapper to run them, adding Soda data validation of the scraped data:

```python
def scrape_azure():
    """Scrape Azure via API public json web pages"""
    from price_scraper.commands import azurescrape, pgembed

    folder, port = setup_pg_db(PORT)
    error = azurescrape.run_azure_scrape(port, HOST)
    if not error:
        error = csp_dump(port, "azure")
    if error:
        pgembed.teardown_pg_embed(folder)
        notify_slack("azure", error)
        raise AirflowFailException(error)

    data_test = SodaScanOperator(
        dag=dag,
        task_id="data_test",
        data_sources=[
            {
                "data_source_name": "embedpg",
                "soda_config_path": "price-scraper/soda/configuration_azure.yml",
            }
        ],
        soda_cl_path="price-scraper/soda/price_azure_checks.yml",
    )
    data_test.execute(dict())
    pgembed.teardown_pg_embed(folder)
```

We set up a new Embedded Postgres (this takes a few seconds) and then scrape directly to it.

We then use the SodaScanOperator to check the data we have scraped. If there is no error we dump to blob storage; otherwise we notify Slack with the error and raise it, ending the DAG.

Our Soda tests check that the number of prices, and the prices themselves, are in the ranges they should be for each service. We also check that we have the number of tiered rates we expect: over 10 starting usage rates and over 3000 specific tiered prices.
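The Soda checks themselves are written in SodaCL YAML. Purely to illustrate the kind of assertions involved, here is a plain-Python equivalent of a per-service count and range check over an in-memory SQLite table. This is a swapped-in technique, not Soda: the `price_checks` helper, the two-column table layout and the price range are hypothetical:

```python
import sqlite3


def price_checks(conn, service: str, min_rows: int, low: float, high: float) -> list:
    """Return failure messages for one service; an empty list means all checks passed."""
    failures = []
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM infra_price WHERE service = ?", (service,)
    ).fetchone()
    if count <= min_rows:
        failures.append(f"{service}: expected more than {min_rows} prices, got {count}")
    (out_of_range,) = conn.execute(
        "SELECT COUNT(*) FROM infra_price WHERE service = ? AND (price < ? OR price > ?)",
        (service, low, high),
    ).fetchone()
    if out_of_range:
        failures.append(f"{service}: {out_of_range} prices outside [{low}, {high}]")
    return failures


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE infra_price (service TEXT, price REAL)")
conn.executemany(
    "INSERT INTO infra_price VALUES (?, ?)",
    [("vm", 0.05 * i) for i in range(1, 13)] + [("vm", 999.0)],  # one bad price
)
vm_failures = price_checks(conn, "vm", min_rows=10, low=0.0, high=50.0)
print(vm_failures)  # ['vm: 1 prices outside [0.0, 50.0]']
```

In a DAG, a non-empty failure list would be the point to notify and raise, in the same way as a failed scrape.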

If the Soda tests pass, we dump to cloud storage and tear down the temporary Postgres. A final step aggregates each step's data. This saves the money and maintenance of running a persistent database cluster in the cloud for our pipeline.

April 25, 2025

Test and Code

The role of AI in software testing - Anthony Shaw

AI is helping people write code.

Tests are one of those things that some people don't like to write.

Can AI play a role in creating automated software tests?

Well, yes. But it's a nuanced yes.

Anthony Shaw comes on the show to discuss the topic and tries to get AI to write some tests for my very own cards project.

We discuss:

- The promise of AI writing your tests for you

- Downsides to not writing tests yourself

- Bad ways to generate tests

- Good ways to ask AI for help in writing tests

- Tricks to get better results while using copilot and other AI tools

Links:

- The cards project

- A video version of this discussion: Should AI write tests?

Sponsored by:

- Porkbun -- named the #1 domain registrar by USA Today from 2023 to 2025!

- Get a .app or .dev domain name for only $5.99 first year.

Learn pytest:

- The Complete pytest course is now a bundle, with each part available separately.

- pytest Primary Power teaches the super powers of pytest that you need to learn to use pytest effectively.

- Using pytest with Projects has lots of "when you need it" sections like debugging failed tests, mocking, testing strategy, and CI

- Then pytest Booster Rockets can help with advanced parametrization and building plugins.

- Whether you need to get started with pytest today, or want to power up your pytest skills, PythonTest has a course for you.

Everyday Superpowers

Event Sourcing: Reactivity Without the React Overhead

This is the second entry in a five-part series about event sourcing:

- Why I Finally Embraced Event Sourcing—And Why You Should Too

- What is event sourcing and why you should care

- Preventing painful coupling

- Reactivity Without the React Overhead (this page)

- Get started with event sourcing today

In this post, I’ll share some things I’ve enjoyed about event sourcing since adopting it.

I'll start by saying that one of the ideas I love about some of today’s JavaScript frameworks is that the HTML of the page is a functional result of the data on the page. If that data changes, the JS framework will automatically change the affected HTML.

This feature enables some impressive user experiences, and it’s especially fun to see an edit in one part of the page immediately affect another.

I’m finding this a helpful pattern to remember as I’ve been working with event sourcing. Any event can trigger updates to any number of database tables, caches, and UIs, and it's fun to see that reactivity server-side.

React is (mostly) a front-end framework. Its concern is to update the in-browser HTML after data changes.

In a way, you can say event-driven microservices are similar. One part of the system publishes an event without knowing who will listen, and other parts kick off their process with the data coming in from the event.

One of the things that has caught me by surprise about event sourcing is how I get similar benefits of an event-driven microservice architecture in a monolith, while keeping complexity low.

At one time, the project I'm working on was a microservice architecture with six different Python applications. With a vertically sliced, event-sourced architecture, we could make it one. (It's currently two, since the architect feels better that way, but it can easily be one.)

This project processes files through several stages. It's so fun to see this application work. Like an event-driven microservice, the command creates an event when a document enters the system.

However, instead of going to an external PubSub queue, this event gets saved to the event store and then to an internal message bus. The code that created the event doesn't know or care who's listening, and any number of functions can listen in.

In this case, several functions listen for the document created event. One kicks off the first step of the processing. Another makes a new entry for the status page. A third creates an entry in a table for a slice that helps us keep track of our SLAs.

Once the first step finishes, another event is raised. In turn, a few other functions are run. One updates the status info, and another begins the next processing step.

If something went wrong with the first step, we'll save a different event. In reaction to this event, we have a function that updates the status screen, another that adds info to an admin screen to help diagnose what went wrong, a third that notifies an external team that consumes the result of this workflow, and a fourth that will determine whether to retry the process.
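The flow described above, where an event is saved and then published on an internal bus so that any number of functions can react, can be sketched in-process with a dictionary of handlers keyed by event type. This is a minimal illustration, not the post's code: the event classes, `MessageBus` and the handlers are hypothetical, and a plain list stands in for the event store:

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class DocumentCreated:
    document_id: str


@dataclass(frozen=True)
class Step1Finished:
    document_id: str


class MessageBus:
    """Hypothetical in-process bus: publishers never know who is listening."""

    def __init__(self):
        self.event_store = []  # stand-in for a real event store
        self._handlers = defaultdict(list)

    def subscribe(self, event_type: type, handler: Callable) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: object) -> None:
        self.event_store.append(event)  # persist first...
        for handler in self._handlers[type(event)]:  # ...then fan out to every subscriber
            handler(event)


log = []
bus = MessageBus()
bus.subscribe(DocumentCreated, lambda e: log.append(f"start step 1 for {e.document_id}"))
bus.subscribe(DocumentCreated, lambda e: log.append(f"status row for {e.document_id}"))
bus.subscribe(DocumentCreated, lambda e: log.append(f"SLA entry for {e.document_id}"))
bus.subscribe(Step1Finished, lambda e: log.append(f"status 25% for {e.document_id}"))

bus.publish(DocumentCreated("1542-example-94834"))
bus.publish(Step1Finished("1542-example-94834"))
print(log)
```

Adding a new reaction is one more `subscribe` call; the code that publishes the event does not change.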

Keeping the complexity in check

This sounds incredibly complicated, and in some ways it is. There are a lot of small moving parts. But they're all visible either through looking at the event modeling diagram or leveraging the IDE to see where a type of event is used.

This is similar to having an event-driven microservice, but it all lives in a decoupled monolith (decoupled monolith?! Who would have guessed those words would be used together?) and is easily deployable.

The most painful part of creating this app has been debugging issues that span the interactivity between the two services. Adding additional services dramatically increases complexity.

This is not to say that you shouldn't use microservices. I love the idea of implementing slices in different languages to better meet specific slices' needs. But having most of the code in one code base and deploy target is nice.

I'm also thrilled that complexity doesn't grow as the project ages. Because of the decoupled nature of the vertical slices, adding new functionality will not make the code much more complicated. Each slice is isolated, and there are only a few patterns to master.

When it's time to start working on a new piece of functionality, I'll create its folder and examine where my data comes from. Do I need to subscribe to events or pull from a read model? Then, I check to see what events my slice needs to publish. Once those are in place, it's all about implementing the business logic.

Rinse and repeat.

But part of an excellent service is a great user experience, and I love how this reactivity is not just limited to the back end.

I value a great user experience, so early in the project, I looked for when a live-updating view would greatly benefit the user.

The first one I did was the status view I discussed in previous posts. When a document enters our system, it appears in the table like this:

```
Document ID          Status    Last Updated    Duration
1542-example-94834   0% done   5 seconds ago   5 seconds
```

When one step has been finished, the UI looks like this:

```
Document ID          Status    Last Updated    Duration
1542-example-94834   25% done  0 seconds ago   10 seconds
```

The way I implemented this is to have a function that subscribes to the events that would change the UI and update a database table. Something like this:

```python
import typing

StatusEvent = typing.Union[
    DocumentCreated,
    Step1Finished,
    Step1Failed,
    # ...
]


def on_status_updates(event: StatusEvent):
    if isinstance(event, DocumentCreated):
        ...
    elif isinstance(event, Step1Finished):
        db.document(event.document_id).update({
            'percent_done': 25,
            'last_updated': event.stored_at,
        })
    ...
```

This project uses Google’s Firestore as its primary database, and it has a feature allowing you to subscribe to database changes. (Believe it or not, I'm not using the internal bus to update the UI. That'll wait until the next project.)

When a user loads this page, we use HTMX to open a server-sent events connection to code that subscribes to changes in the status database. Something like this (complexity was removed to improve understandability; I'm working on making this aspect more understandable for a future blog post):

```python
from datetime import UTC, datetime  # datetime.UTC needs Python 3.11+


def on_database_update(changes):
    now = datetime.now(tz=UTC)
    template = templates.template('document_status_row.jinja')
    return HTTPStream(
        template.render_async(document=changes, last_updated=now)
    )
```

With that, any time an entry in the database changes, an updated table row gets sent to the browser as HTML, and HTMX either updates an existing row or inserts the new one into the table. (This isn't unique to HTMX. Frameworks like data-star, unpoly, and fixi can do the same.) All this without setting up a JavaScript build pipeline or WebSockets infrastructure.
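`HTTPStream` above is the post's own simplified helper. On the wire, each update is a server-sent event: an optional `event:` line, one `data:` line per line of payload, and a blank line to end the message. A small formatter, as a hedged sketch: `format_sse` and the `document_status` event name are hypothetical, and HTMX's SSE extension can listen for a named event like this one:

```python
from typing import Optional


def format_sse(data: str, event: Optional[str] = None) -> str:
    """Encode one server-sent event message.

    Multi-line payloads (such as a rendered table row) need a `data:` prefix
    on every line; the trailing blank line tells the browser the message is complete.
    """
    lines = []
    if event:
        lines.append(f"event: {event}")
    lines.extend(f"data: {line}" for line in data.splitlines() or [""])
    return "\n".join(lines) + "\n\n"


row = '<tr id="1542-example-94834">\n  <td>25% done</td>\n</tr>'
print(format_sse(row, event="document_status"), end="")
```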

One final aspect of event sourcing I've enjoyed through this project is the ability to decide what to do based on an item's history.

I mentioned above that an external team wants to be notified about specific conditions.

When I was tasked to implement this, the person giving it to me felt a little sorry, as they suspected this had complexity hiding below the surface.

After talking with the external team, they wanted up to two notifications for every document: one notification if that document completed every step, or one notification if the document failed any step twice.

I handled the first case similarly to this:

```python
def document_just_completed_all_steps(event_names: list[str]) -> bool:
    # Exactly one final-step event, and it is the most recent event
    return (
        event_names.count('Step4Finished') == 1 and
        event_names[-1] == 'Step4Finished'
    )


def should_notify(event: DomainEvent, container: svcs.Container) -> bool:
    event_store = container.get(EventStore)
    event_names = [
        e.name for e
        in event_store.get_event_stream(event.entity_id)
    ]
    if document_just_completed_all_steps(event_names):
        return True
    return did_document_fail_retry_for_the_first_time(event_names)
```

Thankfully, with event sourcing and the excellent svcs framework (thanks, Hynek!), I have access to every event that happened to that document.

I used that list of events to ensure that there was only one instance of the final event, and that it was the last event in the sequence.
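Because the whole history is just a list, these decisions are easy to test in isolation. A runnable sketch under stated assumptions: event names are plain strings, `Step4Finished` is the final step (as in the post), and `did_document_fail_retry_for_the_first_time` is a hypothetical implementation of "failed any step twice", since the post doesn't show it:

```python
def document_just_completed_all_steps(event_names: list) -> bool:
    """True only for the first time the final step finishes."""
    return event_names.count("Step4Finished") == 1 and event_names[-1] == "Step4Finished"


def did_document_fail_retry_for_the_first_time(event_names: list) -> bool:
    """Hypothetical: True when the latest event is some step's second failure
    and no step had already failed twice earlier in the history."""
    if not event_names or not event_names[-1].endswith("Failed"):
        return False
    latest = event_names[-1]
    if event_names.count(latest) != 2:
        return False
    earlier = event_names[:-1]
    return all(earlier.count(name) < 2 for name in set(earlier) if name.endswith("Failed"))


def should_notify_for_history(event_names: list) -> bool:
    return (document_just_completed_all_steps(event_names)
            or did_document_fail_retry_for_the_first_time(event_names))


done = ["DocumentCreated", "Step1Finished", "Step2Finished", "Step3Finished", "Step4Finished"]
failed = ["DocumentCreated", "Step1Failed", "Step1Failed"]
print(should_notify_for_history(done), should_notify_for_history(failed))  # True True
```

Each rule looks only at the event history, so a second completion or a later repeated failure does not trigger another notification.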

If this sounds like magic, it’s not. It’s just good design and a new way of thinking about change. In the next post, I’ll show you exactly how to dip your toe into event sourcing.


Awesome Python Applications

DollarDollar Bill Y'all

DollarDollar Bill Y'all: Self-hosted money management and expense splitting web service.

Links:

Beaver Habits

aider

Python GUIs

Building a Currency Converter Application using Tkinter — Convert between currencies with ease

In this tutorial, you'll create a currency converter application with Python and Tkinter. The app will allow the users to select the source currency, choose the target currency, and input the amount to convert. The application will use real-time exchange rates to convert from the source to target currency, providing accurate and up-to-date conversions.


Through this project you'll gain hands-on experience working with Tkinter's GUI elements, handling user input, and interacting with an external API. By the end of the tutorial, you'll have a functional currency converter app and have learnt practical skills you can apply to your own Python & Tkinter projects.

Setting Up the Environment

We'll start by setting up a working environment for the project. We are using Tkinter for this project, which is included by default in most Python installations, so we don't need to install that.

If you're on Linux you may need to install it. See the Linux Tkinter installation instructions.

We'll also be using the requests library to make HTTP requests to the Exchange Rate API, which we can install from PyPI using pip. We also want to create a folder to hold our project files, including our Python script and images.

Below are the instructions to create a folder for our project called currency_converter, set up a virtual environment, activate it and install requests into that environment.

macOS and Linux:

```
$ mkdir currency_converter/
$ cd currency_converter
$ python -m venv venv
$ source venv/bin/activate
(venv)$ pip install requests
```

Windows:

```
> mkdir currency_converter/
> cd currency_converter
> python -m venv venv
> venv\Scripts\activate.bat
(venv)> pip install requests
```

We will use the free Exchange Rate API to access real-time exchange rate data. It offers various endpoints that allow users to retrieve exchange rate information for different currency pairs, convert currency amounts from one currency to another, and perform other related operations. You'll have to sign up on the API page to be able to run the app.

Setting Up the Project Structure

Now that we've created the virtual environment and installed the required third-party libraries, we can set up the project structure.

Add a folder named images to the root of your project.

The images/ subfolder is where we will place the app's logo. Our application code will go in the root folder, named currency_converter.py.

```
currency_converter/
│
├── images/
│   └── logo.png
│
└── currency_converter.py
```

Getting Started with our Application

Now that we have the project folder set up and requirements installed, we can start building our app. Create a new file called currency_converter.py at the root of the project folder and open this in your editor.

We'll start by adding the imports we need for our project, and building a basic window which will hold our application UI.

```python
import os
import sys
import tkinter as tk
import tkinter.ttk as ttk
from tkinter import messagebox

import requests


class CurrencyConverterApp(tk.Tk):
    def __init__(self):
        super().__init__()
        self.geometry("500x450+300+150")
        self.title("Currency Converter")
        self.resizable(width=0, height=0)


if __name__ == "__main__":
    app = CurrencyConverterApp()
    app.mainloop()
```

In the above code, we import the os and sys modules from the Python standard library. Then, we import the tkinter package as tk. This shorthand is typically used with Tkinter to save repeatedly typing the full name. We also import the tkinter.ttk package, which gives us access to Tkinter's themed widgets, which look nicer than the defaults. We also import Tkinter's messagebox module for creating pop-up dialogs. Finally, we import the requests library to make HTTP requests to the API so that we can get up-to-date exchange rates and convert currencies.

We start by creating the application's root window, which in Tkinter also works as the application container. To do this we create a class called CurrencyConverterApp, which inherits from the tk.Tk class. On this class we add a custom __init__() method, which is called to initialize the object, where we set up the window's attributes.

To set the window's width, height, and position, we use the geometry() method. For the window's title, we use title(). Finally, we use resizable() with the width and height set to 0 to make the window unresizable.

With the main window class created, we then add the code to instantiate the class -- creating an instance of the CurrencyConverterApp -- and start up the main loop for the application. The main loop is the event loop which handles user input events from the keyboard and mouse and passes them to the widgets.

When you run this code, you see the following window on your screen:

Currency converter's main window


There's not much to see for now: our currency converter doesn't have a functional GUI. It's just a plain window with an appropriate title and size.

Now let's create the app's GUI.

Creating the Currency Converter's GUI

To create the app's GUI, let's begin adding the widgets to the main window. We will add the following widgets:

- The app's logo

- Two labels and two associated combo boxes for selecting the source and target currency

- A label for the amount to convert and an associated entry field

- A label for displaying the conversion results

- A button to run the conversion

First, let's add the logo to our application.

We'll be including small snippets of the code as we build it. But the full code is shown below, to make sure you don't get mixed up.

```python
# ...

class CurrencyConverterApp(tk.Tk):
    # ...

    def build_gui(self):
        self.logo = tk.PhotoImage(file="images/logo.png")
        tk.Label(self, image=self.logo).pack()
```

In this code snippet, we first create a build_gui() method to define all the widgets we need in our GUI. Inside the method, we load an image using the tk.PhotoImage() class.

Then, we create a label to display the image using the tk.Label() class, which takes self and image as arguments. Finally, we position the label in the main window using the pack() method, which is a geometry manager in Tkinter.

To use the build_gui() method, we need to call it. To do this, add the call to self.build_gui() to the end of the __init__() method. That gives us the following code.

```python
import os
import sys
import tkinter as tk
import tkinter.ttk as ttk
from tkinter import messagebox

import requests


class CurrencyConverterApp(tk.Tk):
    def __init__(self):
        super().__init__()
        self.geometry("500x450+300+150")
        self.title("Currency Converter")
        self.resizable(width=0, height=0)
        self.build_gui()  # build the widgets once the window is configured

    def build_gui(self):
        self.logo = tk.PhotoImage(file="images/logo.png")
        tk.Label(self, image=self.logo).pack()


if __name__ == "__main__":
    app = CurrencyConverterApp()
    app.mainloop()
```

Go ahead and run the app. You'll get an output like the following:

Currency converter window with logo


Now we can see something! We'll continue adding widgets to build up our UI. First, we will create a frame to position the widgets. Below the logo code we added to the build_gui() method, add a frame:

```python
# ...

class CurrencyConverterApp(tk.Tk):
    # ...

    def build_gui(self):
        self.logo = tk.PhotoImage(file="images/logo.png")
        tk.Label(self, image=self.logo).pack()
        frame = tk.Frame(self)
        frame.pack()
```

Here, we create a frame using the tk.Frame class. It takes self as an argument because the current window is the frame's parent. To position the frame inside the main window, we use the pack() method.

With the frame in place, we can add some more widgets. Below is the code for populating the frame:

```python
# ...

class CurrencyConverterApp(tk.Tk):
    # ...

    def build_gui(self):
        # ...
        from_label = ttk.Label(frame, text="From:")
        from_label.grid(row=0, column=0, padx=5, pady=5, sticky=tk.W)
        to_label = ttk.Label(frame, text="To:")
        to_label.grid(row=0, column=1, padx=5, pady=5, sticky=tk.W)
        self.from_combo = ttk.Combobox(frame)
        self.from_combo.grid(row=1, column=0, padx=5, pady=5)
        self.to_combo = ttk.Combobox(frame)
        self.to_combo.grid(row=1, column=1, padx=5, pady=5)
```

In this code, we create two labels using the ttk.Label() class. We position them using the grid() method. Then, we create the two combo boxes using the ttk.Combobox() class, and position them both in the frame using the grid() method again.

Note that we've positioned the labels in the first row of the frame while the combo boxes are in the second row. The GUI now will look something like this:

Currency converter's GUI


Great! Your app's GUI now has the widgets for selecting the source and target currencies. Let's now add the last four widgets. Below is the code for this:

```python
# ...

class CurrencyConverterApp(tk.Tk):
    # ...

    def build_gui(self):
        # ...
        amount_label = ttk.Label(frame, text="Amount:")
        amount_label.grid(row=2, column=0, padx=5, pady=5, sticky=tk.W)
        self.amount_entry = ttk.Entry(frame)
        self.amount_entry.insert(0, "1.00")
        self.amount_entry.grid(
            row=3, column=0, columnspan=2, padx=5, pady=5, sticky=tk.W + tk.E
        )
        self.result_label = ttk.Label(font=("Arial", 20, "bold"))
        self.result_label.pack()
        convert_button = ttk.Button(self, text="Convert", width=20)
        convert_button.pack()
```

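In this code snippet, we add two labels using the ttk.Label() class as usual: one for the amount and one for the conversion result. Then, we create the entry field using the ttk.Entry() class. Next, we add the Convert button using the ttk.Button() class. The amount label and entry field go inside the frame object, while the result label and the Convert button are placed directly in the main window.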

Note that we've positioned the amount_label and the amount_entry using the grid() method. In contrast, we've used the pack() method to place the result_label and convert_button.

The complete current code is shown below.

import os
import sys
import tkinter as tk
import tkinter.ttk as ttk
from tkinter import messagebox

import requests


class CurrencyConverterApp(tk.Tk):
    def __init__(self):
        super().__init__()
        self.geometry("500x450+300+150")
        self.title("Currency Converter")
        self.resizable(width=0, height=0)
        self.build_gui()

    def build_gui(self):
        self.logo = tk.PhotoImage(file="images/logo.png")
        tk.Label(self, image=self.logo).pack()

        frame = tk.Frame(self)
        frame.pack()

        from_label = ttk.Label(frame, text="From:")
        from_label.grid(row=0, column=0, padx=5, pady=5, sticky=tk.W)

        to_label = ttk.Label(frame, text="To:")
        to_label.grid(row=0, column=1, padx=5, pady=5, sticky=tk.W)

        self.from_combo = ttk.Combobox(frame)
        self.from_combo.grid(row=1, column=0, padx=5, pady=5)

        self.to_combo = ttk.Combobox(frame)
        self.to_combo.grid(row=1, column=1, padx=5, pady=5)

        amount_label = ttk.Label(frame, text="Amount:")
        amount_label.grid(row=2, column=0, padx=5, pady=5, sticky=tk.W)

        self.amount_entry = ttk.Entry(frame)
        self.amount_entry.insert(0, "1.00")
        self.amount_entry.grid(
            row=3, column=0, columnspan=2, padx=5, pady=5, sticky=tk.W + tk.E
        )

        self.result_label = ttk.Label(self, font=("Arial", 20, "bold"))
        self.result_label.pack()

        convert_button = ttk.Button(self, text="Convert", width=20)
        convert_button.pack()


if __name__ == "__main__":
    app = CurrencyConverterApp()
    app.mainloop()

Run this and you'll see the following window.

Currency converter's GUI


This is looking good now. The app's GUI is complete, even though it doesn't do anything yet. You can't see the label that will show the conversion result, but it is there and will become visible once we give it some text.

Implementing the Convert Currency Functionality

As mentioned, we will be using the Exchange Rate API to get our live currency data. To request data from the API, we need an API key. You can get one by signing up and creating an account. This is free if you only need daily rates (fine for our app).

Exchange Rate API Sign-up Page


If you accept the terms, you will receive your API key via the email address you provided, or you can go to the dashboard, where you will see your API key as follows:

Exchange Rate API Key


Now that we have the API key, let's implement the currency conversion functionality. First, we will add the API key as an environment variable.

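Open a terminal and run the command for your platform. Note that you must replace the "your_api_key" part with the actual API key:

- Windows (Command Prompt):

> setx API_KEY "your_api_key"

- Windows PowerShell:

PS> setx API_KEY "your_api_key"

- macOS and Linux:

$ export API_KEY="your_api_key"

On Windows, setx saves the variable for future sessions only, so open a new terminal window before running the app. On macOS and Linux, export sets the variable for the current shell session only, so run the app from that same shell.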

Now, get back to your code editor. Below the imports, paste the following code:

# ...
import requests

API_KEY = os.getenv("API_KEY")
if API_KEY is None:
    messagebox.showerror(
        "API Key Error", "API_KEY environment variable is not set."
    )
    sys.exit(1)

# No trailing slash: request paths below start with "/"
API_URL = f"https://v6.exchangerate-api.com/v6/{API_KEY}"

# ...

Here, we retrieve the API key from the environment variable (that we just set) using the os.getenv() function. Using an if statement, we check whether the key was set. If not, we show an error message and terminate the app's execution using the sys.exit() function. Then, we build the base URL that all our API requests will start from.
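Because every request starts from the same base URL, it can help to build endpoint URLs in one place so stray slashes don't creep in (a base URL ending in "/" followed by f"{API_URL}/latest/USD" produces a double slash). Here is a minimal sketch; build_url() is a hypothetical helper that isn't part of the tutorial's code, and it assumes the v6 URL layout used above.

```python
from urllib.parse import quote

BASE_URL = "https://v6.exchangerate-api.com/v6"


def build_url(api_key: str, *parts: str) -> str:
    """Join the API key and path segments onto BASE_URL with single slashes.

    Hypothetical helper: each segment has surrounding slashes stripped and is
    percent-encoded, so user input can't inject extra path segments.
    """
    segments = [api_key, *parts]
    cleaned = [quote(str(s).strip("/"), safe="") for s in segments]
    return "/".join([BASE_URL, *cleaned])


if __name__ == "__main__":
    print(build_url("your_api_key", "latest", "USD"))
    print(build_url("your_api_key", "pair", "USD", "EUR", "100"))
```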

Run the code now from a shell where the variable is set (on Windows, a terminal opened after running setx). If you see the error dialog, then you know that the environment variable has not been set: check the instructions again, and make sure the variable is set correctly in the shell where you are running the code. If you see the application as normal, then everything is good!

The error shown when API_KEY is not set correctly in the environment.


Interacting with the API

Now that we have the API_KEY set up correctly, we can move on to interacting with the API itself. To do this, we will create a method for getting all the currencies. Let's call it get_currencies(). Below the build_gui() method, add this new method:

# ...

class CurrencyConverterApp(tk.Tk):
    # ...

    def build_gui(self):
        # ...

    def get_currencies(self):
        response = requests.get(f"{API_URL}/latest/USD")
        data = response.json()
        return list(data["conversion_rates"])

The method above sends a GET request to the /latest/USD endpoint. We convert the received JSON response to a Python dictionary using the json() method. Finally, we call list() on the "conversion_rates" dictionary, which gives us a list of its keys: the currency codes.
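To see what list(data["conversion_rates"]) produces without calling the API, here is a minimal offline sketch using a hand-written sample shaped like a /latest response. The rates are made up, currencies_from_response() is a hypothetical helper, and the "result" and "error-type" checks assume the response format described in the ExchangeRate-API documentation.

```python
def currencies_from_response(data: dict) -> list:
    """Return the currency codes from a /latest response dictionary.

    Assumes the documented shape: {"result": "success", "conversion_rates": {...}}.
    """
    if data.get("result") != "success":
        # On failure the API is assumed to report an "error-type" string
        raise RuntimeError(f"API error: {data.get('error-type', 'unknown')}")
    # Calling list() on a dict returns its keys, i.e. the currency codes
    return list(data["conversion_rates"])


# Made-up sample shaped like a /latest/USD response (rates are not real)
sample = {
    "result": "success",
    "base_code": "USD",
    "conversion_rates": {"USD": 1, "EUR": 0.9, "GBP": 0.8},
}

if __name__ == "__main__":
    codes = currencies_from_response(sample)
    print(codes)  # ['USD', 'EUR', 'GBP']
    # Looking up the default by code doesn't rely on USD coming first
    print(codes.index("USD"))  # 0
```

If you'd rather not depend on the order in which the API lists currencies, you could pass codes.index("USD") to current() instead of 0.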

We can populate the two combo boxes using the get_currencies() method. Add the following code to the bottom of the build_gui method.

# ...

class CurrencyConverterApp(tk.Tk):
    # ...

    def build_gui(self):
        # ...
        currencies = self.get_currencies()

        self.from_combo["values"] = currencies
        self.from_combo.current(0)

        self.to_combo["values"] = currencies
        self.to_combo.current(0)

This calls the get_currencies method to get the available currencies, and then populates the two combo boxes with the returned list.

If you run the application now, you'll see that the combo boxes contain the currencies returned from the API. The current(0) calls select the first currency in the list as the default, which is USD, the base currency of our request.

Populating the From currency combo box


Populating the To currency combo box


Handling the Currency Conversion

The final step is to implement the actual currency conversion, using the values returned from the API. Add a new method called convert() to the bottom of our CurrencyConverterApp class.

# ...

class CurrencyConverterApp(tk.Tk):
    # ...

    def convert(self):
        src = self.from_combo.get()
        dest = self.to_combo.get()
        amount = self.amount_entry.get()

        response = requests.get(f"{API_URL}/pair/{src}/{dest}/{amount}").json()
        result = response["conversion_result"]

        self.result_label.config(text=f"{amount} {src} = {result} {dest}")

In the first three lines, we get input data from the from_combo and to_combo combo boxes and the amount_entry field using the get() method of each widget. The from_combo combo box data is named src, the to_combo combo box data is named dest, and the amount_entry field data is named amount.

To get the conversion between currencies, we make a GET request to the API using a URL constructed using the input data. The result returned from the API is again in JSON format, which we convert to a Python dictionary by calling .json(). We take the "conversion_result" from the response and use this to update the result label with the conversion result.
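The convert() method passes whatever is typed in the entry straight to the API and assumes the response contains "conversion_result". Here is a minimal sketch of validating the amount first and checking the response before reading it. parse_amount() and conversion_result() are hypothetical helpers, and the "result" and "error-type" fields are assumed from the API's documented response format.

```python
from decimal import Decimal, InvalidOperation


def parse_amount(text: str) -> Decimal:
    """Turn the entry's text into a positive Decimal, or raise ValueError."""
    try:
        amount = Decimal(text.strip())
    except InvalidOperation:
        raise ValueError(f"Not a number: {text!r}") from None
    # Reject NaN/Infinity first (ordering comparisons on NaN would raise)
    if not amount.is_finite() or amount <= 0:
        raise ValueError(f"Amount must be a positive number: {text!r}")
    return amount


def conversion_result(data: dict) -> float:
    """Read the converted amount from a /pair response dictionary."""
    if data.get("result") != "success":
        raise ValueError(f"API error: {data.get('error-type', 'unknown')}")
    return data["conversion_result"]


if __name__ == "__main__":
    amount = parse_amount("100")
    sample = {"result": "success", "conversion_result": 92.5}  # made-up value
    print(f"{amount} USD = {conversion_result(sample)} EUR")  # 100 USD = 92.5 EUR
```

In the app, convert() could wrap both calls in try/except ValueError and report the problem with messagebox.showerror() instead of crashing. Decimal also keeps the typed text, such as 1.00, exactly as entered when you echo it back in the result label.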

Now we need to hook our convert() method up to a button so we can trigger it. To do this, we add the command argument to the button's definition and pass it the method object self.convert, without parentheses. Writing self.convert() instead would call the method once while the GUI is being built and hand its return value (None) to the button.

Here's how the button code will look after the update:

convert_button = ttk.Button(
    self,
    text="Convert",
    width=20,
    command=self.convert,
)

This code binds the button to the convert() method. Now, when you click the Convert button, this method will run.

That's it! With these final touches, your currency converter application is complete. The full final code is shown below.

import os
import sys
import tkinter as tk
import tkinter.ttk as ttk
from tkinter import messagebox

import requests

API_KEY = os.getenv("API_KEY")
if API_KEY is None:
    messagebox.showerror("API Key Error", "API_KEY environment variable is not set.")
    sys.exit(1)

# No trailing slash: request paths below start with "/"
API_URL = f"https://v6.exchangerate-api.com/v6/{API_KEY}"


class CurrencyConverterApp(tk.Tk):
    def __init__(self):
        super().__init__()
        self.geometry("500x450+300+150")
        self.title("Currency Converter")
        self.resizable(width=0, height=0)
        self.build_gui()

    def build_gui(self):
        self.logo = tk.PhotoImage(file="images/logo.png")
        tk.Label(self, image=self.logo).pack()

        frame = tk.Frame(self)
        frame.pack()

        from_label = ttk.Label(frame, text="From:")
        from_label.grid(row=0, column=0, padx=5, pady=5, sticky=tk.W)

        to_label = ttk.Label(frame, text="To:")
        to_label.grid(row=0, column=1, padx=5, pady=5, sticky=tk.W)

        self.from_combo = ttk.Combobox(frame)
        self.from_combo.grid(row=1, column=0, padx=5, pady=5)

        self.to_combo = ttk.Combobox(frame)
        self.to_combo.grid(row=1, column=1, padx=5, pady=5)

        amount_label = ttk.Label(frame, text="Amount:")
        amount_label.grid(row=2, column=0, padx=5, pady=5, sticky=tk.W)

        self.amount_entry = ttk.Entry(frame)
        self.amount_entry.insert(0, "1.00")
        self.amount_entry.grid(
            row=3, column=0, columnspan=2, padx=5, pady=5, sticky=tk.W + tk.E
        )

        self.result_label = ttk.Label(self, font=("Arial", 20, "bold"))
        self.result_label.pack()

        convert_button = ttk.Button(
            self, text="Convert", width=20, command=self.convert
        )
        convert_button.pack()

        currencies = self.get_currencies()

        self.from_combo["values"] = currencies
        self.from_combo.current(0)

        self.to_combo["values"] = currencies
        self.to_combo.current(0)

    def get_currencies(self):
        response = requests.get(f"{API_URL}/latest/USD")
        data = response.json()
        return list(data["conversion_rates"])

    def convert(self):
        src = self.from_combo.get()
        dest = self.to_combo.get()
        amount = self.amount_entry.get()

        response = requests.get(f"{API_URL}/pair/{src}/{dest}/{amount}").json()
        result = response["conversion_result"]

        self.result_label.config(text=f"{amount} {src} = {result} {dest}")


if __name__ == "__main__":
    app = CurrencyConverterApp()
    app.mainloop()

Run the final code and you will be able to convert amounts between any of the supported currencies. For example, select USD and EUR in the From and To combo boxes and enter a conversion amount of 100. The application will call the API and update the label with the result of the conversion, as follows:

Running currency converter app


Conclusion

Well done! You've built a functional currency converter application with Python & Tkinter. You've learnt the basics of building up a UI using Tkinter's widgets and layouts, how to use APIs to fill widgets with values and perform operations in response to user input.

If you want to take it further, think about some ways that you could improve the usability or extend the functionality of this application:

- Do you have some conversions you do regularly? Add a way to quickly apply "favorite" currency conversions to the UI.

- Add buttons to set standard amounts (10, 100, 1000) for conversion.

- Use multiple API requests to show historic values (1 day ago, 1 week ago, 1 year ago) using the historic data API.

See if you can add these yourself! If you want to go further with Tkinter, take a look at our complete Tkinter tutorial.

April 24, 2025

Everyday Superpowers

Supercharge Your Enums: Cleaner Code with Hidden Features

I see a lot of articles suggesting you use enums by mostly restating the Python documentation. Unfortunately, I feel this leaves readers without crucial practical advice, which I'd like to pass on here.

This is especially true since most of the projects I've worked on, and developers I've coded with, don't seem to know this. The result is more complex code, where values and behavior that belong to the same business concept end up scattered across separate code files.

First, let's review enum fundamentals:

If you're not aware of enums, they were added to Python in version 3.4 and represent a way to communicate, among other things, a reduced set of options.

For example, you can communicate the status of tasks:

from enum import Enum

class TaskStatus(Enum):
    PENDING = 'pending'
    IN_PROGRESS = 'in_progress'
    COMPLETED = 'completed'
    CANCELLED = 'cancelled'

The four lines of code beneath the `TaskStatus` class definition define the enum "members," or the specific options for this class.

This code communicates the fact that there are only four statuses a task can have.

This is very useful information, as it clearly shows the options available, but it is as deep as many developers go with enums. They don't realize how much more enums can do besides holding constant values.

For example, many don't know how easy it can be to select an enum member.

I see a lot of code that complicates selecting enum members, like this:

def change_task_status(task_id: str, status: str):
    task = database.get_task_by_id(task_id)
    for member in TaskStatus:
        if member.value == status:
            task.status = member
    database.update_task(task)

Instead, enum classes are smart enough to select members from their values (the things on the right side of the equal sign). You can also select members by their names (the left side of the equal sign) with square brackets, `TaskStatus['PENDING']`. Selecting by value looks like this:

>>> TaskStatus('pending')

<TaskStatus.PENDING: 'pending'>

This means that you could simplify the code above like this (be aware that if the status string does not match any enum member's value, it'll raise a `ValueError`):

def change_task_status(task_id: str, status: str):
    task = database.get_task_by_id(task_id)
    task.status = TaskStatus(status)
    database.update_task(task)

But enums are not just static values. They can have behavior and data associated with them too.

The thing about enums that many people are missing is that they are objects too.

For example, I recently worked on a project that would have had this after the `TaskStatus` class to connect a description to each enum member:

STATUS_TO_DESCRIPTION_MAP = {
    TaskStatus.PENDING: "Task is pending",
    TaskStatus.IN_PROGRESS: "Task is in progress",
    TaskStatus.COMPLETED: "Task is completed",
    TaskStatus.CANCELLED: "Task is cancelled"
}

But here's the thing: you can add it in the enum!

Granted, it takes a little bit of work, but here's how I would do it (if we weren't using the enum's value to select a member, we could make this simpler by editing the `__init__` method instead, like they do in the docs):

class TaskStatus(Enum):
    PENDING = "pending", 'Task is pending'
    IN_PROGRESS = "in_progress", 'Task is in progress'
    COMPLETED = "completed", 'Task is completed'
    CANCELLED = "cancelled", 'Task is cancelled'

    def __new__(cls, value, description):
        obj = object.__new__(cls)
        obj._value_ = value
        obj.description = description
        return obj

This means that whenever a `TaskStatus` member is created, it uses the first item of its tuple as its value and stores the second as a new attribute, `description`.

This means that a `TaskStatus` member would behave like this:

>>> completed = TaskStatus.COMPLETED
>>> completed.value
'completed'
>>> completed.description
'Task is completed'
>>> completed.name
'COMPLETED'

On top of that, you can define methods that interact with the enum members.

Let's add what the business expects would be the next status for each member:

class TaskStatus(Enum):
    PENDING = "pending", "Task is pending"
    IN_PROGRESS = "in_progress", "Task is in progress"
    COMPLETED = "completed", "Task is completed"
    CANCELLED = "cancelled", "Task is cancelled"

    def __new__(cls, value, description):
        ...  # same body as in the previous example

    @property
    def expected_next_status(self):
        if self == TaskStatus.PENDING:
            return TaskStatus.IN_PROGRESS
        elif self == TaskStatus.IN_PROGRESS:
            return TaskStatus.COMPLETED
        else:  # Task is completed or cancelled
            return self

Now, each `TaskStatus` member "knows" what status is expected to be next:

>>> TaskStatus.PENDING.expected_next_status
<TaskStatus.IN_PROGRESS: 'in_progress'>
>>> TaskStatus.CANCELLED.expected_next_status
<TaskStatus.CANCELLED: 'cancelled'>

You could use this in a task detail view:

def task_details(task_id: str):
    task = database.get_task_by_id(task_id)
    return {
        "id": task.id,
        "title": task.title,
        "status": task.status.value,
        "expected_next_status": task.status.expected_next_status.value,
    }

>>> task_details("task_id_123")
{
    'id': 'task_id_123',
    'title': 'Sample Task',
    'status': 'pending',
    'expected_next_status': 'in_progress'
}

Python enums are more powerful than most developers realize, and I hope you might remember these great options.
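Because the second `TaskStatus` example abbreviates `__new__` with `...`, here is a minimal, self-contained version that combines both ideas so it can be run as-is. `parse_status()` is a hypothetical helper, not from the article, that turns the `ValueError` mentioned earlier into a default value; whether to fall back or let the error propagate is a design choice for your own code.

```python
from enum import Enum


class TaskStatus(Enum):
    PENDING = "pending", "Task is pending"
    IN_PROGRESS = "in_progress", "Task is in progress"
    COMPLETED = "completed", "Task is completed"
    CANCELLED = "cancelled", "Task is cancelled"

    def __new__(cls, value, description):
        # Use the first tuple item as the member's value...
        obj = object.__new__(cls)
        obj._value_ = value
        # ...and keep the second one as an extra attribute
        obj.description = description
        return obj

    @property
    def expected_next_status(self):
        if self is TaskStatus.PENDING:
            return TaskStatus.IN_PROGRESS
        if self is TaskStatus.IN_PROGRESS:
            return TaskStatus.COMPLETED
        return self  # completed and cancelled tasks stay where they are


def parse_status(text, default=None):
    """Look up a member by value, returning `default` for unknown strings."""
    try:
        return TaskStatus(text)
    except ValueError:
        return default


if __name__ == "__main__":
    print(TaskStatus("completed").description)  # Task is completed
    print(TaskStatus["PENDING"].expected_next_status)  # TaskStatus.IN_PROGRESS
    print(parse_status("archived", TaskStatus.PENDING))  # TaskStatus.PENDING
```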

Read more...

Python Software Foundation

2025 PSF Board Election Schedule Change

Starting this year, the PSF Board Election will be held a couple of months later in the year than in years prior. The nomination period through the end of the vote will run around the August to September time frame. This is due to several factors:

- Planning the Board election while organizing PyCon US is a strain on both PSF Staff and Board Members who assist with the election.

- We received feedback that nominees would appreciate more time between the nomination cutoff and the start of the vote so that they can campaign.

- There are several US holidays in June and July (and PyCon US recovery!), which means PSF Staff will intermittently be out of the office. We want to ensure we are ready and available to assist with memberships, election questions, nominations, and everything else election-related!

A detailed election schedule will be published in June.

Consider running for the PSF Board!

In the meantime, we hope that folks in the Python community consider running for a seat on the PSF Board! Wondering who runs for the Board? People who care about the Python community, who want to see it flourish and grow, and also have a few hours a month to attend regular meetings, serve on committees, participate in conversations, and promote the Python community.

Check out our Life as Python Software Foundation Director video to learn more about what being a part of the PSF Board entails. You can also check out our FAQ’s with the PSF Board video on the PSF YouTube Channel. If you’re headed to PyCon US 2025 next month, that’s a great time to connect with current and past Board Members. We also invite you to review our Annual Impact Report for 2023 to learn more about the PSF’s mission and what we do. Last but not least, we welcome you to join the PSF Board Office Hours to connect with Board members about being a part of the PSF Board!

Everyday Superpowers

Finding the root of a project with pathlib

Every now and then I'm writing code deep in some Python project, and I realize that it would be nice to generate a file at the root of a project.

The following is the way I'm currently finding the root folder with pathlib:

if __name__ == '__main__':
    from pathlib import Path
    project_root = next(
        p for p in Path(__file__).parents
        if (p / '.git').exists()
    )

    project_root.joinpath('output.text').write_text(...)

To explain what this does, let's start on line 4:

- `Path(__file__)` will create an absolute path to the file this Python code is in.

- `.parents` is an immutable sequence of the path's ancestor folders, nearest first, so if this path is `Path('/Users/example/projects/money_generator/src/cash/services/models.py')`, iterating over it gives:

- `Path('/Users/example/projects/money_generator/src/cash/services')` then

- `Path('/Users/example/projects/money_generator/src/cash')`

- and so on

- `next()` retrieves the next item from an iterator. It can also take a default value to return when the iterator has no items left; without one, it raises `StopIteration`, which is what happens here if no parent folder contains `.git`.

- `p for p in Path(__file__).parents` is a generator expression that will yield each parent of the current file.

- `(p / '.git').exists()` given a path `p`, it will look to see if the git folder (`.git`) exists in that folder

- So, `next(p for p in Path(__file__).parents if (p / '.git').exists())` will return the first folder that contains a git repo in the parents of the current Python file.
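One caveat: if none of the parents contains `.git`, `next()` raises `StopIteration`. Here is a minimal sketch that passes a default to `next()` and accepts several marker names. `find_project_root()` and its `markers` parameter are hypothetical, and the choice of markers (`.git`, `pyproject.toml`) is an assumption to adapt to your own projects.

```python
from pathlib import Path


def find_project_root(start, markers=(".git", "pyproject.toml")):
    """Return the nearest folder at or above `start` containing a marker, or None."""
    start = Path(start).resolve()
    # A directory is checked itself first; for a file, start with its parent
    candidates = [start, *start.parents] if start.is_dir() else list(start.parents)
    return next(
        (p for p in candidates if any((p / m).exists() for m in markers)),
        None,  # default returned instead of raising StopIteration
    )


if __name__ == "__main__":
    root = find_project_root(Path.cwd())
    print(root if root is not None else "No project root found")
```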

How do you accomplish this task?

Read more...

Zato Blog

Integrating with Jira APIs


Overview

Continuing the series of articles about the newest cloud connections in Zato 3.3, this episode covers Atlassian Jira from the perspective of invoking its APIs to build integrations between Jira and other systems.

There are essentially two modes of integrating with Jira:

- Jira reacts to events taking place in your projects and invokes your endpoints accordingly via WebHooks. In this case, it is Jira that explicitly establishes connections with and sends requests to your APIs.

- Jira projects are queried periodically or as a consequence of events triggered by Jira using means other than WebHooks.

The first case is usually more straightforward to conceptualize - you create a WebHook in Jira, point it to your endpoint and Jira invokes it when a situation of interest arises, e.g. a new ticket is opened or updated. I will talk about this variant of integrations with Jira in a future instalment as the current one is about the other situation, when it is your systems that establish connections with Jira.

The reason why it is more practical to first speak about the second form is that, even if WebHooks are somewhat easier to reason about, they do come with their own ramifications.

To start off, assuming that you use the cloud-based version of Jira (e.g. https://example.atlassian.net), you need to have a publicly available endpoint for Jira to invoke through WebHooks. Very often, this is undesirable because the systems that you need to integrate with may be internal ones, never meant to be exposed to public networks.

Secondly, your endpoints need to have a TLS certificate signed by a public Certificate Authority and they need to be accessible on port 443. Again, both of these are something that most enterprise systems will not allow at all or it may take months or years to process such a change internally across the various corporate departments involved.

Lastly, even if a WebHook can be used, it is not always a given that the initial information that you receive in the request from a WebHook will already contain everything that you need in your particular integration service. Thus, you will still need a way to issue requests to Jira to look up details of a particular object, such as tickets, in this way reducing WebHooks to the role of initial triggers of an interaction with Jira, e.g. a WebHook invokes your endpoint, you have a ticket ID on input and then you invoke Jira back anyway to obtain all the details that you actually need in your business integration.

The end situation is that, although WebHooks are a useful concept that I will write about in a future article, they may very well not be sufficient for many integration use cases. That is why I start with integration methods that are alternative to WebHooks.

Alternatives to WebHooks

If, in our case, we cannot use WebHooks then what next? Two good approaches are:

- Scheduled jobs

- Reacting to emails (via IMAP)

Scheduled jobs will let you periodically inquire with Jira about the changes that you have not processed yet. For instance, with a job definition as below:

Now, the service configured for this job will be invoked once per minute to carry out any integration work required. For instance, it can get a list of tickets since the last time it ran, process each of them as required in your business context and update a database with information about what has just been done. The database can be based on Redis, MongoDB, SQL or anything else.

Integrations built around scheduled jobs make most sense when you need to make periodic sweeps across large swaths of business data. These are the "Give me everything that changed in the last period" kind of interactions, when you do not know precisely how much data you are going to receive.
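As a rough illustration of the "everything that changed since the last run" pattern, the sketch below builds a JQL query from the time of the previous run. It is plain Python rather than Zato's API: `jql_changed_since()` is a hypothetical helper, the project key ABC is made up, and persisting the last-run time (in Redis, SQL, ...) is left out. It assumes JQL's "yyyy-MM-dd HH:mm" date format, which Atlassian documents as being interpreted in the Jira user's time zone.

```python
from datetime import datetime


def jql_changed_since(project_key: str, last_run: datetime) -> str:
    """Build a JQL query for issues in a project updated since `last_run`."""
    # JQL dates go down to minutes only, so ">=" may return an issue that was
    # already handled in the previous run; the processed-tickets database
    # mentioned above can filter those out.
    since = last_run.strftime("%Y-%m-%d %H:%M")
    return f'project = "{project_key}" AND updated >= "{since}" ORDER BY updated ASC'


if __name__ == "__main__":
    print(jql_changed_since("ABC", datetime(2025, 4, 23, 9, 5)))
```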

In the specific case of Jira tickets, though, an interesting alternative may be to combine scheduled jobs with IMAP connections:

The idea here is that when new tickets are opened, or when updates are made to existing ones, Jira will send out notifications to specific email addresses and we can take advantage of it.

For instance, you can tell Jira to CC or BCC an address such as zato@example.com. Zato will still run a scheduled job, but instead of connecting with Jira directly, that job will look up unread emails in its inbox ("UNSEEN" per the relevant RFC).

Anything that is unread must be new since the last iteration, which means that we can process each such email from the inbox, in this way guaranteeing that we process only the latest updates and dispensing with the need for our own database of tickets already processed. We can extract the ticket ID or other details from the email, look up its details in Jira and then continue as needed.

All the details of how to work with IMAP emails are provided in the documentation but it would boil down to this:

# -*- coding: utf-8 -*-

# Zato
from zato.server.service import Service

class MyService(Service):

    def handle(self):

        conn = self.email.imap.get('My Jira Inbox').conn

        for msg_id, msg in conn.get():

            # Process the message here ..
            # (process_message is your own function, defined elsewhere)
            process_message(msg.data)

            # .. and mark it as seen in IMAP.
            msg.mark_seen()
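Zato handles the IMAP connection details for you. For comparison, here is a rough sketch of the same "read unseen messages" loop using only Python's standard imaplib module, outside of Zato. `fetch_unseen()` is a hypothetical helper and the "INBOX" mailbox name is an assumption. Fetching a message with RFC822 (rather than BODY.PEEK[]) sets its \Seen flag on the server, which plays the role of `msg.mark_seen()` above.

```python
import email
import imaplib
from email.message import Message
from typing import Iterator


def fetch_unseen(conn: imaplib.IMAP4, mailbox: str = "INBOX") -> Iterator[Message]:
    """Yield each unread message in `mailbox` as an email.message.Message."""
    conn.select(mailbox)  # read-write, so fetching can set \Seen
    # SEARCH UNSEEN returns message numbers as one space-separated bytes string
    typ, data = conn.search(None, "UNSEEN")
    if typ != "OK":
        return
    for num in data[0].split():
        # Fetching RFC822 returns the full message and marks it as \Seen
        typ, msg_data = conn.fetch(num, "(RFC822)")
        if typ != "OK" or not msg_data or msg_data[0] is None:
            continue
        raw = msg_data[0][1]
        yield email.message_from_bytes(raw)
```

In real use you would first create the connection with `imaplib.IMAP4_SSL(host)` and call `conn.login(user, password)`, then loop with `for msg in fetch_unseen(conn): ...`.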

The natural question is - how would the "process_message" function extract details of a ticket from an email?

There are several ways:

- Each email has a subject of a fixed form - "[JIRA] (ABC-123) Here goes description". In this case, ABC-123 is the ticket ID.

- Each email will contain a summary, such as the one below, which can also be parsed:

Summary: Here goes description

Key: ABC-123

URL: https://example.atlassian.net/browse/ABC-123

Project: My Project

Issue Type: Improvement

Affects Versions: 1.3.17

Environment: Production

Reporter: Reporter Name

Assignee: Assignee Name

- Finally, each email will have an "X-Atl-Mail-Meta" header with interesting metadata that can also be parsed and extracted:

X-Atl-Mail-Meta: user_id="123456:12d80508-dcd0-42a2-a2cd-c07f230030e5",

event_type="Issue Created",

tenant="https://example.atlassian.net"

The first option is the most straightforward and likely the most convenient one - simply parse out the ticket ID and call Jira with that ID on input for all the other information about the ticket. How to do it exactly is presented in the next chapter.
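A minimal sketch of the first and third options follows, using only the standard library. The regular expression assumes ticket keys look like ABC-123 (an uppercase project key, a hyphen, then digits), and the header format is taken from the example above. Both function names are hypothetical, and as with any email content, the parsed values should be treated as untrusted input.

```python
import re
from email import message_from_string
from email.message import Message
from typing import Optional

# Assumed key format: uppercase project key, a hyphen, then digits, e.g. ABC-123
SUBJECT_KEY = re.compile(r"\[JIRA\]\s*\(([A-Z][A-Z0-9_]+-\d+)\)")
# key="value" pairs, as in the X-Atl-Mail-Meta example above
META_PAIR = re.compile(r'(\w+)="([^"]*)"')


def ticket_key_from_subject(msg: Message) -> Optional[str]:
    """Return the ticket key from a "[JIRA] (ABC-123) ..." subject, if present."""
    match = SUBJECT_KEY.search(msg.get("Subject", ""))
    return match.group(1) if match else None


def atl_mail_meta(msg: Message) -> dict:
    """Parse the X-Atl-Mail-Meta header into a dictionary of strings."""
    return dict(META_PAIR.findall(msg.get("X-Atl-Mail-Meta", "")))


if __name__ == "__main__":
    sample = message_from_string(
        "Subject: [JIRA] (ABC-123) Here goes description\n"
        'X-Atl-Mail-Meta: user_id="123456:12d80508", event_type="Issue Created"\n'
        "\n"
        "Body\n"
    )
    print(ticket_key_from_subject(sample))  # ABC-123
    print(atl_mail_meta(sample)["event_type"])  # Issue Created
```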

Regardless of how we parse the emails, the important part is that we know that we invoke Jira only when there are new or updated tickets - otherwise there would not have been any new emails to process. Moreover, because it is our side that invokes Jira, we do not expose our internal system to the public network directly.

However, from the perspective of the overall security architecture, email is still part of the attack surface, so we need to make sure that we read and parse emails with that in view. In other words, regardless of whether it is Jira invoking us or us reading emails from Jira, all the usual security precautions regarding API integrations and accepting input from external sources still hold and need to be part of the design of the integration workflow.

Creating Jira connections

The sections above showed how we can arrive at the point of invoking Jira, and now we are ready to actually do it.

As with other types of connections, Jira connections are created in Zato Dashboard, as below. Note that you use the email address of a user on whose behalf you connect to Jira but the only other credential is that user's API token previously generated in Jira, not the user's password.

Invoking Jira

With a Jira connection in place, we can now create a Python API service. In this case, we accept a ticket ID on input (called "a key" in Jira) and we return a few details about the ticket to our caller.

This is the kind of service that could be invoked from a service triggered by a scheduled job. That is, we would separate the tasks: one service would be responsible for opening IMAP inboxes and parsing emails, and the one below would be responsible for communication with Jira.

Thanks to this loose coupling, we make everything much more reusable. Being able to change the services independently is only part of the benefit; more importantly, with such separation, both of them can be reused by future services as well, without tying them rigidly to this one integration alone.

# -*- coding: utf-8 -*-

# stdlib
from dataclasses import dataclass

# Zato
from zato.common.typing_ import cast_, dictnone
from zato.server.service import Model, Service

# ###########################################################################

if 0:
    from zato.server.connection.jira_ import JiraClient

# ###########################################################################

@dataclass(init=False)
class GetTicketDetailsRequest(Model):
    key: str

@dataclass(init=False)
class GetTicketDetailsResponse(Model):
    assigned_to: str = ''
    progress_info: dictnone = None

# ###########################################################################

class GetTicketDetails(Service):

    class SimpleIO:
        input = GetTicketDetailsRequest
        output = GetTicketDetailsResponse

    def handle(self):

        # This is our input data
        input = self.request.input # type: GetTicketDetailsRequest

        # .. create a reference to our connection definition ..
        jira = self.cloud.jira['My Jira Connection']

        # .. obtain a client to Jira ..
        with jira.conn.client() as client:

            # Cast to enable code completion
            client = cast_('JiraClient', client)

            # Get details of a ticket (issue) from Jira
            ticket = client.get_issue(input.key)

            # Observe that ticket may be None (e.g. invalid key), hence this 'if' guard ..
            if ticket:

                # .. build a shortcut reference to all the fields in the ticket ..
                fields = ticket['fields']

                # .. build our response object ..
                response = GetTicketDetailsResponse()

                # .. an unassigned ticket has no assignee, hence the fallback to an empty dict ..
                assignee = fields.get('assignee') or {}
                response.assigned_to = assignee.get('emailAddress', '')
                response.progress_info = fields['progress']

                # .. and return the response to our caller.
                self.response.payload = response

# ###########################################################################

Creating a REST channel and testing it

The last remaining part is a REST channel to invoke our service through. We will provide the ticket ID (key) on input and the service will reply with what was found in Jira for that ticket.

We are now ready for the final step - we invoke the channel, which invokes the service which communicates with Jira, transforming the response from Jira to the output that we need:

$ curl localhost:17010/jira1 -d '{"key":"ABC-123"}'

{

"assigned_to":"zato@example.com",

"progress_info": {

"progress": 10,

"total": 30

}

}

$

And this is everything for today. Just remember that this is only one way of integrating with Jira. The other one, using WebHooks, is something that I will go into in one of the future articles.

More resources

➤ Python API integration tutorials

➤ What is an integration platform?

➤ Python Integration platform as a Service (iPaaS)

➤ What is an Enterprise Service Bus (ESB)? What is SOA?

➤ Open-source iPaaS in Python

April 23, 2025

DataWars.io

Replit Teams for Education Deprecation: All you need to know | DataWars

Real Python

Getting Started With Python IDLE

Python IDLE is the default integrated development environment (IDE) that comes bundled with every Python installation, helping you to start coding right out of the box. In this tutorial, you’ll explore how to interact with Python directly in IDLE, edit and execute Python files, and even customize the environment to suit your preferences.

By the end of this tutorial, you’ll understand that:

- Python IDLE is completely free and comes packaged with the Python language itself.

- Python IDLE is an IDE included with Python installations, designed for basic editing, execution, and debugging of Python code.

- You open IDLE through your system’s application launcher or terminal, depending on your operating system.

- You can customize IDLE to make it a useful tool for writing Python.

Understanding the basics of Python IDLE will allow you to write, test, and debug Python programs without installing any additional software.


Open Python’s IDLE for the First Time

Python IDLE is free and comes included in Python installations on Windows and macOS. If you’re a Linux user, then you should be able to find and download Python IDLE using your package manager. Once you’ve installed it, you can then open Python IDLE and use it as an interactive interpreter or as a file editor.

Note: IDLE stands for “Integrated Development and Learning Environment.” It’s a play on IDE, which stands for Integrated Development Environment.

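The procedure for opening IDLE depends on how you installed Python and varies from one operating system to another. On Windows and macOS, you can usually find IDLE in your application launcher, such as the Start menu or the Applications folder. From a terminal, running python -m idlelib (or python3 -m idlelib, depending on your system) also opens it.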

Once you’ve started IDLE successfully, you should see a window titled IDLE Shell 3.x.x, where 3.x.x corresponds to your version of Python.

The window that you’re seeing is the IDLE shell, which is an interactive interpreter that IDLE opens by default.

Get to Know the Python IDLE Shell

When you open IDLE, the shell is the first thing that you see. The shell is the default mode of operation for Python IDLE. It’s a blank Python interpreter window, which you can use to interact with Python immediately.

Understanding the Interactive Interpreter

The interactive interpreter is a basic Read-Eval-Print Loop (REPL). It reads a Python statement, evaluates it, and then prints the result on the screen. Then, it loops back to read the next statement.
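To make the read, evaluate, print, loop cycle concrete, here's a toy sketch. It isn't how IDLE is implemented. It reads from a list of strings instead of the keyboard so it can run unattended, and tiny_repl is a hypothetical name. Real REPLs also handle multi-line blocks and report errors instead of stopping.

```python
from typing import Dict, List


def tiny_repl(lines: List[str]) -> List[str]:
    """Run each line through read, evaluate, print; return what was 'printed'."""
    namespace: Dict[str, object] = {}  # names defined so far persist between lines
    outputs: List[str] = []
    for line in lines:                 # Loop: take the next line
        source = line.strip()          # Read
        if not source:
            continue
        try:
            result = eval(source, namespace)  # Evaluate it as an expression...
        except SyntaxError:
            exec(source, namespace)           # ...or run it as a statement (e.g. x = 1)
            continue
        if result is not None:
            outputs.append(repr(result))      # Print: collect the repr the shell would show
    return outputs


print(tiny_repl(["x = 6 * 7", "x", "x + 1"]))
```

Statements such as assignments produce no output, while expressions echo their value, which mirrors what you see in the IDLE shell.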

Note: For a full guide to the standard Python REPL, check out The Python Standard REPL: Try Out Code and Ideas Quickly.

The IDLE shell is an excellent place to experiment with small code snippets and test short lines of code.

Interacting With the IDLE Shell

When you launch Python’s IDLE, it will immediately start a Python shell for you. Go ahead and write some Python code in the shell:

>>> print("Hello, from IDLE!")
Hello, from IDLE!

Here, you used print() to output the string "Hello, from IDLE!" to your screen. This is the most basic way to interact with Python IDLE. You type in commands one at a time and Python responds with the result of each command.

Next, take a look at the menu bar. You’ll see a few options for using the shell:

Read the full article at https://realpython.com/python-idle/ »

[ Improve Your Python With 🐍 Python Tricks 💌 – Get a short & sweet Python Trick delivered to your inbox every couple of days. >> Click here to learn more and see examples ]

April 22, 2025

PyCoder’s Weekly

Issue #678: Namespaces, __init__, Sets, and More (April 22, 2025)

#678 – APRIL 22, 2025

View in Browser »

Namespaces in Python

In this tutorial, you’ll learn about Python namespaces, the structures that store and organize the symbolic names during the execution of a Python program. You’ll learn when namespaces are created, how they’re implemented, and how they support variable scope.

REAL PYTHON
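As a quick illustration of how namespaces support variable scope, here's a small sketch. It is not taken from the tutorial, and the function names are hypothetical. Each lookup below falls through the local, enclosing, global, and built-in namespaces, often summarized as the LEGB rule.

```python
x = "global"  # lives in the module's global namespace


def outer():
    x = "enclosing"  # lives in outer()'s local namespace

    def inner_local():
        x = "local"  # a new name in inner_local()'s own namespace
        return x

    def inner_enclosing():
        return x  # no local x here, so lookup falls back to outer()'s x

    return inner_local(), inner_enclosing()


def read_global():
    return x  # no local or enclosing x, so the global one is found


def read_builtin():
    return len  # not defined in this module, so found in the built-in namespace


print(outer())        # ('local', 'enclosing')
print(read_global())  # global
print("x" in globals())  # True
```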

Stop Writing __init__ Methods

Glyph recommends using dataclasses to avoid writing __init__ methods by hand. The post shows you how, and what extra work constructor side effects require.

GLYPH LEFKOWITZ
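The general idea can be sketched like this: the dataclass-generated __init__ only stores values, and any side-effecting work moves into a classmethod. This is a generic illustration, not code from Glyph's post. ServerConfig and the APP_HOST/APP_PORT variables are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class ServerConfig:
    """Plain data: the generated __init__ only assigns host and port."""

    host: str
    port: int

    @classmethod
    def from_env(cls, environ: Optional[Mapping[str, str]] = None) -> "ServerConfig":
        """Alternate constructor that does the side-effecting work.

        Reading the environment happens here, not in __init__, so tests can
        build a ServerConfig directly from plain values.
        """
        env = os.environ if environ is None else environ
        return cls(
            host=env.get("APP_HOST", "localhost"),
            port=int(env.get("APP_PORT", "8000")),
        )


print(ServerConfig("example.com", 443))
print(ServerConfig.from_env({"APP_PORT": "9000"}))
```

Passing the environment in as a mapping is one way to keep the factory easy to test; reading os.environ directly would work too.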

AI Agent Code Walkthrough with Python & Temporal

Join us on May 2nd at 9am PST/12pm EST for a deep dive into Temporal’s agentic AI use cases. We’ll begin with a live demo showing how Temporal lets you recover from unexpected issues before moving on to a live walkthrough with our Solution Architects →

TEMPORAL sponsor

Practical Uses of Sets

Sets are unordered collections of values that are great for removing duplicates, quick containment checks, and set operations.

TREY HUNNER
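The three uses mentioned above (removing duplicates, containment checks, and set operations) fit in a few lines. This is a quick sketch rather than code from the article. dedupe_preserving_order and the example sets are hypothetical.

```python
def dedupe_preserving_order(items):
    """Drop duplicates while keeping first-seen order."""
    seen = set()
    result = []
    for item in items:
        if item not in seen:  # average O(1) membership test on a set
            seen.add(item)
            result.append(item)
    return result


admins = {"alice", "bob"}
editors = {"bob", "carol"}

both = admins & editors         # intersection: {"bob"}
either = admins | editors       # union: {"alice", "bob", "carol"}
only_admins = admins - editors  # difference: {"alice"}
exactly_one = admins ^ editors  # symmetric difference: {"alice", "carol"}

print(set([3, 1, 3, 2, 1]))                      # duplicates removed, order not guaranteed
print(dedupe_preserving_order([3, 1, 3, 2, 1]))  # [3, 1, 2]
```

A plain set() is enough when order doesn't matter; the helper keeps a set only for fast lookups while the list preserves order.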

Articles & Tutorials

Elliptical Python Programming

This fun little article shows how certain combinations of punctuation in Python can evaluate to integers, and as a result allow you to create some rather obfuscated code. See also this associated article that breaks down exactly how it all works.

SUSAM PAL
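The core trick is easy to reproduce: ... is the Ellipsis singleton, comparing it with itself gives True or False, and booleans behave as 1 and 0 in arithmetic. The constructions below are a simplified illustration, not the exact ones from the article.

```python
one = (... == ...)            # True, which acts as 1 in arithmetic
zero = (... != ...)           # False, which acts as 0
two = one + one               # True + True evaluates to the int 2
three = len([..., ..., ...])  # a list holding three Ellipsis objects
forty_two = (two + two + two) * (two + two + two + one)  # 6 * 7

print(one, zero, two, three, forty_two)  # True False 2 3 42
print(type(two))                         # <class 'int'>
```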

How to Exit Loops Early With the Python Break Keyword

In this tutorial, you’ll explore various ways to use Python’s break statement to exit a loop early. Through practical examples, such as a student test score analysis tool and a number-guessing game, you’ll see how the break statement can improve the efficiency and effectiveness of your code.

REAL PYTHON
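In the spirit of the tutorial's test score example, here's a small sketch of break stopping a scan at the first match, paired with Python's for/else, whose else branch runs only when the loop finishes without a break. The function name and the passing threshold of 50 are hypothetical.

```python
def first_failing_index(scores, passing=50):
    """Return the index of the first score below `passing`, or None."""
    for index, score in enumerate(scores):
        if score < passing:
            break  # stop early: later scores don't change the answer
    else:
        return None  # runs only if the loop never hit break
    return index


print(first_failing_index([70, 82, 45, 30]))  # 2
print(first_failing_index([60, 90]))          # None
```

Because the loop stops at the first failing score, any remaining scores are never read, which matters when they come from a slow or lazy source.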

YOLO11 for Real-Time Object Detection

Ready-to-deploy, state of the art, open source computer vision apps? Sign me up!

INTEL CORPORATION sponsor

Creating a Python Dice Roll Application

In this step-by-step video course, you’ll build a dice-rolling simulator app with a minimal text-based user interface using Python. The app will simulate the rolling of up to six dice. Each individual die will have six sides.

REAL PYTHON course
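The core of such an app is small. The sketch below only covers rolling up to six six-sided dice; it is not the course's code and leaves out the text-based display. roll_dice is a hypothetical name, and the optional rng parameter is there so results can be reproduced with a seed.

```python
import random
from typing import List, Optional

SIDES = 6
MAX_DICE = 6


def roll_dice(num_dice: int, rng: Optional[random.Random] = None) -> List[int]:
    """Roll num_dice six-sided dice and return the face values."""
    if not 1 <= num_dice <= MAX_DICE:
        raise ValueError(f"Please choose between 1 and {MAX_DICE} dice.")
    if rng is None:
        rng = random.Random()  # unseeded: different results each run
    return [rng.randint(1, SIDES) for _ in range(num_dice)]


print(roll_dice(3))
```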

Django Simple Deploy and Other DevOps Things

Talk Python interviews Eric Matthes, educator, author, and developer behind Django Simple Deploy. If you’ve ever struggled with taking that final step of getting your Django app onto a live server, this tool might be for you.

KENNEDY & MATTHES podcast

Mastering DuckDB: Part 2

This is the second part of a post about using DuckDB when you’re used to pandas or Polars. It covers how to translate DataFrame operations into their not-so-obvious SQL equivalents.

QUANSIGHT.ORG • Shared by Marco Gorelli

ProcessThreadPoolExecutor: When I/O Becomes CPU-bound

Learn how to combine thread and process management into a single executor class. Includes details on when you may be I/O-bound vs CPU-bound and what to do about it.

LEMON24

14 Advanced Python Features

Edward has collected a series of Python tricks and patterns that he has used over the years. They include typing overloads, generics, protocols, and more.

EDWARD LI
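Three of the features mentioned (protocols, generics, and overloads) can be sketched together. This is a generic illustration rather than code from Edward's article, and all the names are hypothetical. The type information is checked by a static type checker such as mypy, not enforced at runtime.

```python
from dataclasses import dataclass
from typing import Iterable, List, Protocol, TypeVar, Union, overload


class SupportsClose(Protocol):
    """Any object with a close() method matches; no inheritance required."""

    def close(self) -> None: ...


def close_all(resources: Iterable[SupportsClose]) -> int:
    """Close every resource and return how many were closed."""
    count = 0
    for resource in resources:
        resource.close()
        count += 1
    return count


T = TypeVar("T")


def first(items: List[T]) -> T:
    """Generic: a type checker infers first([1, 2]) as int, first(["a"]) as str."""
    return items[0]


@overload
def double(value: int) -> int: ...
@overload
def double(value: str) -> str: ...
def double(value: Union[int, str]) -> Union[int, str]:
    """The overloads tell a type checker that int maps to int and str to str."""
    return value * 2


@dataclass
class FakeConnection:
    """Hypothetical resource that satisfies SupportsClose structurally."""

    closed: bool = False

    def close(self) -> None:
        self.closed = True


print(close_all([FakeConnection(), FakeConnection()]))  # 2
print(first(["a", "b"]), double(21), double("ab"))      # a 42 abab
```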

Python Is an Interpreted Language With a Compiler

Ever wondered about the distinction between compiled and interpreted languages? Python straddles the boundary, and this article explains how.

NICOLE TIETZ-SOKOLSKAYA
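You can watch both halves from Python itself: the built-in compile() turns source into a code object containing bytecode, and exec() has the interpreter run it. This is a small sketch, not from the article; the disassembly printed by dis varies between Python versions.

```python
import dis

source = "total = price * quantity"
code = compile(source, "<example>", "exec")  # compile step: source -> code object
print(type(code).__name__)                   # code

dis.dis(code)  # the bytecode instructions (exact output depends on the version)

namespace = {"price": 3, "quantity": 4}
exec(code, namespace)       # interpret step: run the bytecode
print(namespace["total"])   # 12
```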

Marimo: Reactive Notebooks for Python

Marimo is a new alternative to Jupyter notebooks. Talk Python interviews Akshay Agrawal about this latest data science tool.

KENNEDY, AGRAWAL

Background Tasks in Django Admin With Celery

This tutorial looks at how to run background tasks directly from Django admin using Celery.

TESTDRIVEN.IO • Shared by Michael Herman

Projects & Code

VTK: Open Source 3D Graphics and Visualization

Visualization Toolkit

KITWARE.COM

Events

Weekly Real Python Office Hours Q&A (Virtual)

April 23, 2025

REALPYTHON.COM

PyCon DE & PyData 2025

April 23 to April 26, 2025

PYCON.DE

DjangoCon Europe 2025

April 23 to April 28, 2025

DJANGOCON.EU

PyCon Lithuania 2025

April 23 to April 26, 2025

PYCON.LT

Django Girls Ho, 2025

April 25, 2025

DJANGOGIRLS.ORG

Django Girls Ho, 2025

April 26, 2025

DJANGOGIRLS.ORG

Happy Pythoning!

This was PyCoder’s Weekly Issue #678.


[ Subscribe to 🐍 PyCoder’s Weekly 💌 – Get the best Python news, articles, and tutorials delivered to your inbox once a week >> Click here to learn more ]

EuroPython Society

Call for EuroPython 2026 Host Venues

Are you a community builder dreaming of bringing EuroPython to your city? The Call for Venues for EuroPython 2026 is now open! 🎉

EuroPython is the longest-running volunteer-led Python conference in the world, uniting communities across Europe. It’s a place to learn, share, connect, spark new ideas—and have fun along the way.

We aim to keep the conference welcoming and accessible by choosing venues that are affordable, easy to reach, and sustainable. As with the selection process in previous years, we’d love your help in finding the best location for future editions.

If you’d like to propose a location on behalf of your community, please fill out this form:

👉 https://forms.gle/ZGQA7WhTW4gc53MD6

Even if 2026 isn’t the right time, don’t hesitate to get in touch. We’d also like to hear from communities interested in hosting EuroPython in 2027 or later.

Questions, suggestions, or comments? Drop us a line at board@europython.eu—we’ll get back to you!

EuroPython Society Board

Real Python

MySQL Databases and Python

MySQL is one of the most popular database management systems (DBMSs) on the market today. It ranked second only to the Oracle DBMS in this year’s DB-Engines Ranking. As most software applications need to interact with data in some form, programming languages like Python provide tools for storing and accessing these data sources.

Using the techniques discussed in this video course, you’ll be able to efficiently integrate a MySQL database with a Python application. You’ll develop a small MySQL database for a movie rating system and learn how to query it directly from your Python code.

By the end of this video course, you’ll be able to:

- Identify unique features of MySQL

- Connect your application to a MySQL database

- Query the database to fetch required data

- Handle exceptions that occur while accessing the database

- Use best practices while building database applications


Python Software Foundation

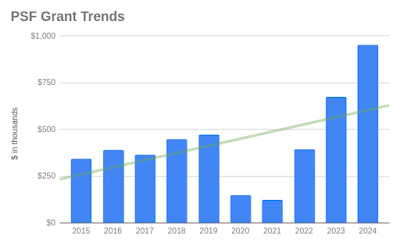

PSF Grants Program 2024 Transparency Report

The PSF’s Grants Program is a key plank in our charitable mission to promote, protect, and advance the Python programming language and to support and facilitate the growth of a diverse and international community of Python programmers. After much research, input, and analysis, the PSF is pleased to share the PSF Grants Program 2024 Transparency Report. The report includes context, numbers, analysis, and next steps for the Program.

Similar to our PSF Grants Program 2022 & 2023 Transparency Report, this 2024 report reflects the outcome of a significant amount of work. There are some differences in the position we were in as we approached the development of this report:

- The 2022 & 2023 report provided concrete areas for improvement

- During 2024, a notable amount of focused PSF Staff time was dedicated to improving our Grants Program and its processes

- The PSF received and awarded a record-breaking number of grants in 2024, which made it necessary to re-evaluate the Program’s goals and sustainability

The data from 2024 was truly wonderful to see (many WOWs and 🥳🥳 were shared among PSF Staff), and we are so happy to share it with the community. The PSF is also excited to share